Jupyter Notebook 1: Getting started with Data Parallel Extensions for Python

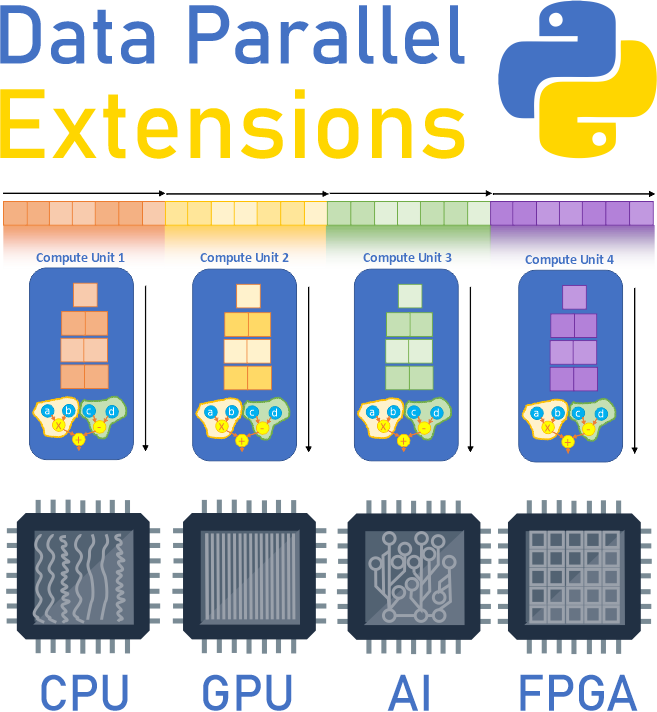

Data Parallel Extensions for Python allow you to run NumPy-like code beyond the CPU using the Data Parallel Extension for NumPy (dpnp). They also allow you to compile the code using the Data Parallel Extension for Numba.

Modifying CPU script to run on GPU

In many cases, running Python on a GPU requires only minor changes to your CPU script:

1. Changing the import statement(s)
2. Specifying the device(s) on which the data is allocated
3. Explicitly copying data between devices and the host as needed
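The three modifications above can be sketched in a single function. This is a minimal sketch, not part of the original notebook: it assumes `dpnp.asarray` (with its `device` keyword) for copying host data to a device and `dpnp.asnumpy` for copying a result back to the host. The function name `matmul_on_device` and the plain NumPy fallback used when dpnp is not installed are hypothetical additions for illustration.

```python
import numpy

try:
    import dpnp  # 1. the changed import: dpnp provides the NumPy-like API
except ImportError:
    dpnp = None  # hypothetical fallback so the sketch still runs without dpnp


def matmul_on_device(host_x, host_y, device=None):
    """Multiply two host (NumPy) matrices on a device and return a host array.

    `device` may be a filter selector string such as "gpu"; None means the
    default device. Without dpnp, the product is simply computed with NumPy.
    """
    if dpnp is None:
        return numpy.matmul(host_x, host_y)
    # 2. allocate (copy) the inputs on the chosen device
    x = dpnp.asarray(host_x, device=device)
    y = dpnp.asarray(host_y, device=device)
    # The product is computed on the device that holds the inputs
    res = dpnp.matmul(x, y)
    # 3. explicitly copy the result from the device back to the host
    return dpnp.asnumpy(res)


ones = numpy.ones((2, 2), dtype=numpy.int64)
print(matmul_on_device(ones, ones))
```

Keeping the host/device copies at the function boundary is one design choice: it makes the data movement of step 3 explicit, at the cost of one copy in each direction.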

We will illustrate these concepts with a series of short examples. Let's assume we have the following NumPy script, originally written to run on the CPU, which creates two matrices x and y, performs matrix-matrix multiplication with numpy.matmul(), and prints the resulting matrix:

[1]:

# CPU script for matrix-matrix multiplication using NumPy
# 1. Import numpy
import numpy as np

# 2. Create two matrices
x = np.array([[1, 1], [1, 1]])
y = np.array([[1, 1], [1, 1]])

# 3. Perform matrix-matrix multiplication
res = np.matmul(x, y)

# 4. Print the resulting matrix
print(res)

[[2 2]
 [2 2]]

As stated before, in many cases running the same code on a GPU requires only a trivial modification of a few lines, like this:

[2]:

# Modified script to run the same code on GPU using dpnp
# 1. Import dpnp
import dpnp as np  # Note: we changed the import statement.
# Since dpnp is a drop-in replacement for (a subset of) numpy, the rest of the code runs like regular numpy

# 2. Create two matrices
x = np.array([[1, 1], [1, 1]])
y = np.array([[1, 1], [1, 1]])

# 3. Perform matrix-matrix multiplication
res = np.matmul(x, y)

# 4. Print what's going on under the hood
print(res)
print("Array x is allocated on the device:", x.device)
print("Array y is allocated on the device:", y.device)
print("res is allocated on the device:", res.device)

[[2 2]
 [2 2]]
Array x is allocated on the device: Device(level_zero:gpu:0)
Array y is allocated on the device: Device(level_zero:gpu:0)
res is allocated on the device: Device(level_zero:gpu:0)

Let’s see what we actually changed.

1. Obviously, we changed the import statement. Now we import dpnp, which is a drop-in replacement for a subset of numpy that extends NumPy-like code beyond the CPU.
2. No change in the matrix creation code. How does dpnp know that matrices x and y need to be allocated on the GPU? If we do not specify the device explicitly, dpnp uses the default device, which is the GPU on systems with installed GPU drivers.
3. No change in the matrix multiplication code. This is because the dpnp programming model is Compute-Follows-Data: dpnp.matmul() determines which device executes the operation based on where its input arrays are allocated. Since our inputs x and y are allocated on the default device (GPU), the matrix-matrix multiplication follows the data allocation and executes on the GPU too.
4. Note that arrays x, y, and res all have the device attribute. Printing it confirms that all inputs are indeed on the GPU device and that the result is on the GPU device as well. To be precise, the data allocation (and execution) happened on GPU device 0 through the Level-Zero driver.
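The Compute-Follows-Data rule described above can be expressed as a small toy model. The function `execution_device` below is hypothetical and only mirrors the rule as stated here; it is not dpnp's implementation. The `SimpleNamespace` objects stand in for dpnp arrays, which expose the same `device` attribute. Treating inputs on different devices as an error, rather than copying them silently, is an assumption of the model that matches the explicit-copy step listed at the beginning of this notebook.

```python
from types import SimpleNamespace


def execution_device(first, *others):
    """Toy model of Compute-Follows-Data: the operation runs where its inputs live.

    If the inputs are allocated on different devices there is no unambiguous
    choice, so the model refuses to guess and asks for an explicit copy.
    """
    device = first.device
    for other in others:
        if other.device != device:
            raise ValueError(
                f"inputs are allocated on different devices: {device} and {other.device}; "
                "copy them to a common device first"
            )
    return device


# Stand-ins for arrays allocated on specific devices
x = SimpleNamespace(device="level_zero:gpu:0")
y = SimpleNamespace(device="level_zero:gpu:0")
print("matmul(x, y) would execute on:", execution_device(x, y))
```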

More on data allocation and the Compute-Follows-Data

Sometimes you may want to be explicit about the device type rather than rely on the default behavior. You can do so by passing the device keyword argument to dpnp array creation functions and random number generators.

[3]:

import dpnp as np

a = np.arange(3, 30, step=6, device="gpu")

dpnp.arange() is an array creation function with the optional keyword argument device, which lets you specify, with a filter selector string, the device on which the data is allocated. In our case, the string specifies the device type "gpu". The proper way to handle the situation in which the specified device is not available is as follows:

[4]:

import dpnp as np

try:
    a = np.arange(3, 30, step=6, device="gpu")
    print("The a is allocated on the device:", a.device)
except Exception:
    print("GPU device is not available")
    # Fallback (one possible choice): allocate the data on the CPU device instead
    a = np.arange(3, 30, step=6, device="cpu")

# Do reduction on the selected device
y = np.sum(a)
print("Reduction sum y: ", y)  # Expect 75
print("Result y is located on the device:", y.device)
print("y.shape=", y.shape)

The a is allocated on the device: Device(level_zero:gpu:0)
Reduction sum y: 75
Result y is located on the device: Device(level_zero:gpu:0)
y.shape= ()

Note that y is itself a zero-dimensional device array (not a Python scalar!), and its data resides on the same device as the input array a.
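If you need a plain Python number on the host, for example in an if statement, copy the zero-dimensional result back explicitly. This is a minimal sketch, assuming `dpnp.asnumpy` for the device-to-host copy; the NumPy fallback used when dpnp is not installed is a hypothetical addition so the sketch runs anywhere.

```python
import numpy

try:
    import dpnp as xp  # allocate on the default device when dpnp is installed
except ImportError:
    xp = numpy  # hypothetical fallback so the sketch also runs without dpnp

a = xp.arange(3, 30, step=6)  # 3, 9, 15, 21, 27
y = xp.sum(a)                 # zero-dimensional result, not a Python int

# Copy the result to the host explicitly, then extract a plain Python number
host_y = xp.asnumpy(y) if xp is not numpy else numpy.asarray(y)
value = host_y.item()
print(type(value).__name__, value)
```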

Advanced data and device control with Data Parallel Control library dpctl

The Data Parallel Control library, dpctl, among other things provides advanced capabilities for controlling devices and data. One of its useful functions is dpctl.lsplatform(verbosity), which prints information about the platforms and devices available on the system with different levels of verbosity:

[5]:

import dpctl

dpctl.lsplatform() # Print platform information

Intel(R) OpenCL HD Graphics OpenCL 3.0
Intel(R) Level-Zero 1.3

Use a different verbosity setting to print extra metadata:

[6]:

import dpctl

dpctl.lsplatform(2)  # Print platform information with verbosity level 2 (highest level)

Platform 0 ::
    Name Intel(R) OpenCL HD Graphics
    Version OpenCL 3.0
    Vendor Intel(R) Corporation
    Backend opencl
    Num Devices 1
      # 0
        Name Intel(R) UHD Graphics 620
        Version 31.0.101.2111
        Filter string opencl:gpu:0
Platform 1 ::
    Name Intel(R) Level-Zero
    Version 1.3
    Vendor Intel(R) Corporation
    Backend ext_oneapi_level_zero
    Num Devices 1
      # 0
        Name Intel(R) UHD Graphics 620
        Version 1.3.0
        Filter string level_zero:gpu:0
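Each device listed above has a filter string of the form backend:device_type:index, the same kind of string passed as device="gpu" earlier, where only some of the components need to be given. The sketch below splits such a string into its parts. `parse_filter_selector` is a hypothetical helper, the sets of recognized backend and device-type names are assumptions for illustration, and the sketch does not check the order of the components; dpctl performs its own parsing when you pass a string to `dpctl.SyclDevice`.

```python
# Assumed name sets for illustration only; dpctl may accept others.
KNOWN_BACKENDS = {"opencl", "level_zero", "cuda", "hip"}
KNOWN_DEVICE_TYPES = {"cpu", "gpu", "accelerator"}


def parse_filter_selector(selector):
    """Split a filter selector string into backend, device type and index.

    Components that are omitted in the string are reported as None.
    """
    parts = {"backend": None, "device_type": None, "index": None}
    for token in selector.strip().lower().split(":"):
        if token in KNOWN_BACKENDS:
            parts["backend"] = token
        elif token in KNOWN_DEVICE_TYPES:
            parts["device_type"] = token
        elif token.isdigit():
            parts["index"] = int(token)
        else:
            raise ValueError(f"unrecognized component {token!r} in {selector!r}")
    return parts


print(parse_filter_selector("gpu"))
print(parse_filter_selector("level_zero:gpu:0"))
```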

You can also query whether the system has GPU devices and retrieve the respective device objects:

[7]:

import dpctl
import dpnp as np

if dpctl.has_gpu_devices():
    devices = dpctl.get_devices(device_type='gpu')
    print(f"This system has {len(devices)} GPUs")
    for device in devices:
        device.print_device_info()
    x = np.array([1, 2, 3], device=devices[0])  # Another way of selecting the device on which to allocate the data
    print("Array x is on the device:", x.device)
else:
    print("GPU devices are not available on this system")

This system has 2 GPUs
Name Intel(R) UHD Graphics 620
Driver version 31.0.101.2111
Vendor Intel(R) Corporation
Filter string opencl:gpu:0

Name Intel(R) UHD Graphics 620
Driver version 1.3.0
Vendor Intel(R) Corporation
Filter string level_zero:gpu:0

Array x is on the device: Device(opencl:gpu:0)
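The "2 GPUs" reported above are the same physical Intel(R) UHD Graphics 620, exposed once through the OpenCL backend and once through the Level Zero backend. A small sketch that groups devices by name makes this visible. `group_by_device_name` is a hypothetical helper that relies only on the `name` and `filter_string` attributes of dpctl device objects; the `SimpleNamespace` stand-ins simply copy the values printed above.

```python
from collections import defaultdict
from types import SimpleNamespace


def group_by_device_name(devices):
    """Map each device name to the filter strings under which it is exposed.

    Heuristic: two physically distinct GPUs of the same model would also
    share a name, so identical names are a hint, not a proof.
    """
    groups = defaultdict(list)
    for device in devices:
        groups[device.name].append(device.filter_string)
    return dict(groups)


# Stand-ins mirroring the output above; with dpctl installed you could pass
# dpctl.get_devices(device_type="gpu") instead.
listed = [
    SimpleNamespace(name="Intel(R) UHD Graphics 620", filter_string="opencl:gpu:0"),
    SimpleNamespace(name="Intel(R) UHD Graphics 620", filter_string="level_zero:gpu:0"),
]
print(group_by_device_name(listed))
```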

The following snippet illustrates how to select the default GPU device using dpctl and generate an array of random numbers on this device:

[8]:

import dpctl
import dpnp as np

try:
    gpu = dpctl.select_gpu_device()
    gpu.print_device_info()  # Print GPU device information
    x = np.random.random(5, device=gpu)  # Create an array of random numbers on the default GPU device
    print("Array x:", x)
    print("Array x.device:", x.device)
except Exception:
    print("No GPU devices available")

Name Intel(R) UHD Graphics 620
Driver version 1.3.0
Vendor Intel(R) Corporation
Filter string level_zero:gpu:0
Array x: [0.87253657 0.87415047 0.61092713 0.1395424 0.95248436]
Array x.device: Device(level_zero:gpu:0)

Alternatively, you can create a GPU device object from a filter selector string:

[9]:

import dpctl

try:
    l0_gpu_0 = dpctl.SyclDevice("level_zero:gpu:0")
    l0_gpu_0.print_device_info()
except Exception:
    print("Cannot create the device object from the given filter selector string")

Name Intel(R) UHD Graphics 620
Driver version 1.3.0
Vendor Intel(R) Corporation
Filter string level_zero:gpu:0

The following snippet checks whether a given device supports certain aspects that may be important for the application, such as support for float64 (double precision), and queries properties such as the amount of global memory available on the device.

[10]:

import dpctl

try:
    gpu = dpctl.select_gpu_device()
    gpu.print_device_info()
    print("Has double precision:", gpu.has_aspect_fp64)
    print("Has atomic operations support:", gpu.has_aspect_atomic64)  # 64-bit atomics
    print(f"Global memory size: {gpu.global_mem_size/1024/1024} MB")
    print(f"Global memory cache size: {gpu.global_mem_cache_size/1024} KB")
    print(f"Maximum compute units: {gpu.max_compute_units}")
except Exception:
    print("The GPU device is not available")

Name Intel(R) UHD Graphics 620
Driver version 1.3.0
Vendor Intel(R) Corporation
Filter string level_zero:gpu:0
Has double precision: True
Has atomic operations support: True
Global memory size: 6284.109375 MB
Global memory cache size: 512.0 KB
Maximum compute units: 24
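One common use of the has_aspect_fp64 check is choosing a floating-point precision the device can actually run. A minimal sketch: `preferred_float_dtype` is a hypothetical helper, falling back to float32 is one design choice among several, and the `SimpleNamespace` stand-ins replace real `dpctl.SyclDevice` objects, which expose the same `has_aspect_fp64` attribute.

```python
import numpy
from types import SimpleNamespace


def preferred_float_dtype(device):
    """Pick float64 when the device supports double precision, else float32."""
    return numpy.float64 if device.has_aspect_fp64 else numpy.float32


# Stand-in devices with and without double-precision support
with_fp64 = SimpleNamespace(has_aspect_fp64=True)
without_fp64 = SimpleNamespace(has_aspect_fp64=False)
print(preferred_float_dtype(with_fp64).__name__, preferred_float_dtype(without_fp64).__name__)
```

With dpnp and a real device object `gpu`, the result could then be passed as the dtype argument of an array creation call such as `dpnp.ones(5, dtype=preferred_float_dtype(gpu), device=gpu)`.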

For more information about dpctl device selection, please refer to Data Parallel Control: Device Selection.

For more information about the dpctl.SyclDevice class methods and attributes, please refer to Data Parallel Control: dpctl.SyclDevice.