Heterogeneous Computing

Device Offload

Python is an interpreted language, which implies that most of a Python script runs on the CPU and only a few data-parallel regions execute on data-parallel devices. That is why the concepts of a host and offload devices are helpful for conceptualizing a heterogeneous programming model in Python.

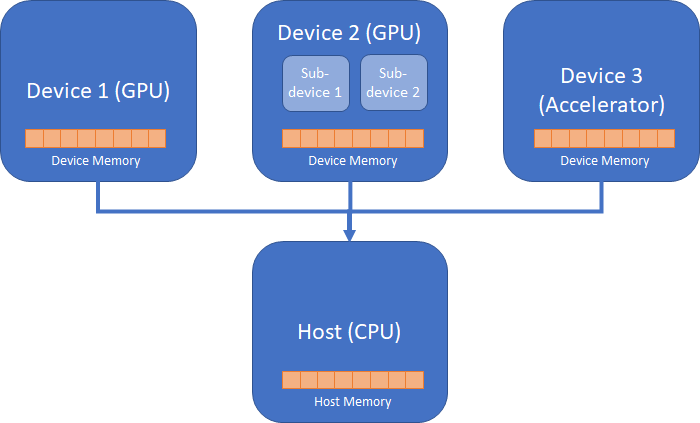

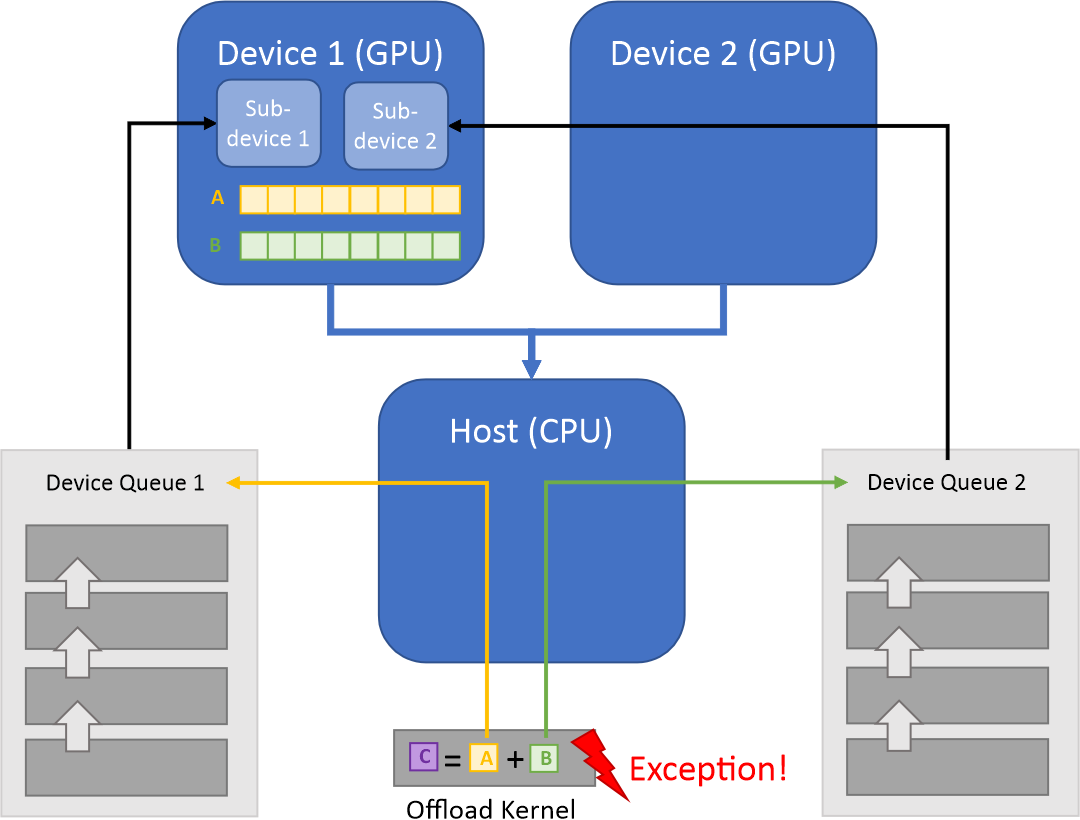

The diagram above illustrates the host (the CPU that runs the Python interpreter) and three devices (two GPU devices and one attached accelerator device). Data Parallel Extensions for Python offer a programming model in which a script executed by the Python interpreter on the host can offload data-parallel kernels to a user-specified device. A kernel is a data-parallel region of a program that is submitted for execution on a device. A program can contain multiple data-parallel regions and, hence, multiple offload kernels.

Kernels can be precompiled into a library, such as dpnp, or coded directly in a programming language for heterogeneous computing, such as OpenCL* or DPC++.

Data Parallel Extensions for Python also offer a way to write kernels directly in Python, using the Numba* compiler together with numba-dpex, the Data Parallel Extension for Numba*.

One or more kernels are submitted for execution into a queue that targets an offload device. You can create one or more queues for each device. In most cases, you do not need to work with device queues directly: Data Parallel Extensions for Python do the necessary underlying work with queues for you through the Compute-Follows-Data programming model.
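The relationship between devices, queues, and kernels can be pictured with a small pure-Python model. This is a hypothetical sketch, not the dpctl or numba-dpex API: Device, Queue, submit, and the sample kernels vector_add and scale are illustrative names. A kernel is modeled as a function called once per index (one "work-item" per element), and the work-items run sequentially on the host rather than in parallel on a device.

```python
# Hypothetical model of kernel offload; not the dpctl or numba-dpex API.
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(frozen=True)
class Device:
    """Stand-in for an offload device such as a GPU or an accelerator."""
    name: str


@dataclass
class Queue:
    """Stand-in for a queue bound to a single device.

    This sketch runs kernels one after another in submission order;
    real queues may schedule work differently.
    """
    device: Device
    submitted: List[str] = field(default_factory=list)

    def submit(self, kernel: Callable, global_size: int, *args) -> None:
        self.submitted.append(kernel.__name__)
        # Emulate one work-item per index; a device would run these in parallel.
        for i in range(global_size):
            kernel(i, *args)


def vector_add(i, a, b, c):
    # Data-parallel region: each work-item computes one output element.
    c[i] = a[i] + b[i]


def scale(i, x, factor):
    x[i] *= factor


gpu0 = Device("gpu:0")
q = Queue(gpu0)  # one of possibly several queues targeting gpu0

a = [1, 2, 3, 4]
b = [10, 20, 30, 40]
c = [0] * len(a)

q.submit(vector_add, len(a), a, b, c)  # first kernel
q.submit(scale, len(c), c, 2)          # second kernel, runs after the first
print(c)            # [22, 44, 66, 88]
print(q.submitted)  # ['vector_add', 'scale']
```

The host-side script stays ordinary Python; only the bodies passed to submit play the role of offloaded data-parallel regions.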

Compute-Follows-Data

Since copying data between devices is typically very expensive, for performance reasons it is essential to process data close to where it is allocated. This is the premise of the Compute-Follows-Data programming model, which states that computation happens where the data resides. Tensors implemented in dpctl and dpnp carry information about their allocation queues and, hence, about the device on which each array is allocated. Based on the tensor input arguments of an offload kernel, Data Parallel Extensions for Python deduce the queue on which the kernel executes.

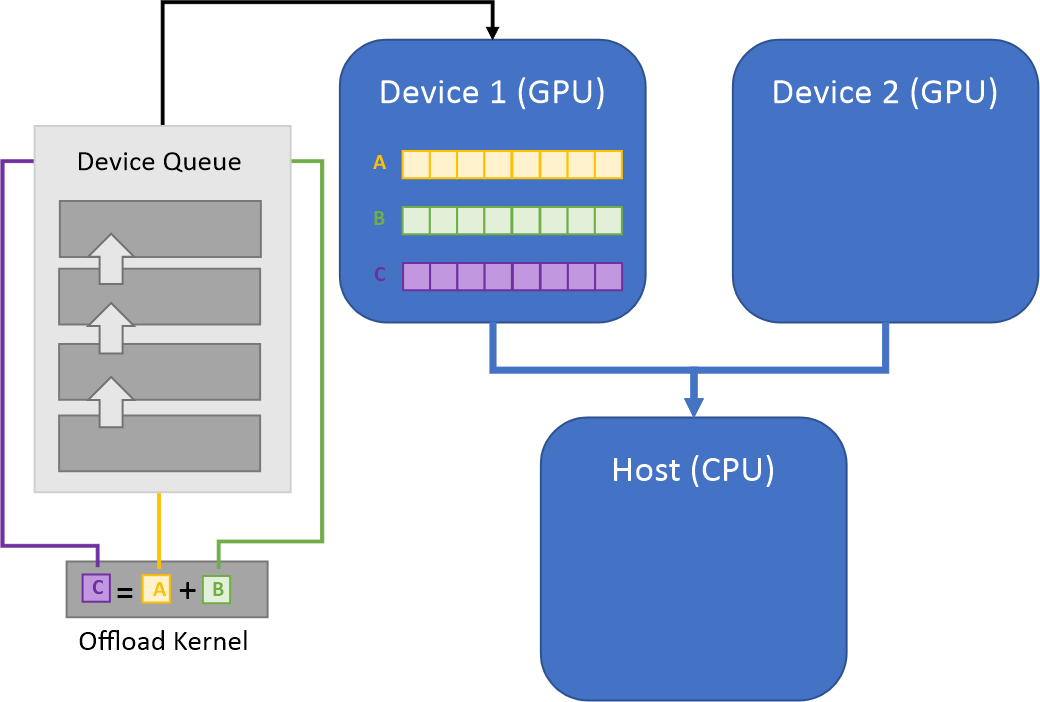

The picture above illustrates the Compute-Follows-Data concept. Arrays A and B are inputs to the Offload Kernel. These arrays carry information about their allocation queue (Device Queue) and the device (Device 1) on which they were created. According to the Compute-Follows-Data paradigm, the Offload Kernel is submitted to this Device Queue, and the resulting array C is created on the Device Queue associated with Device 1.

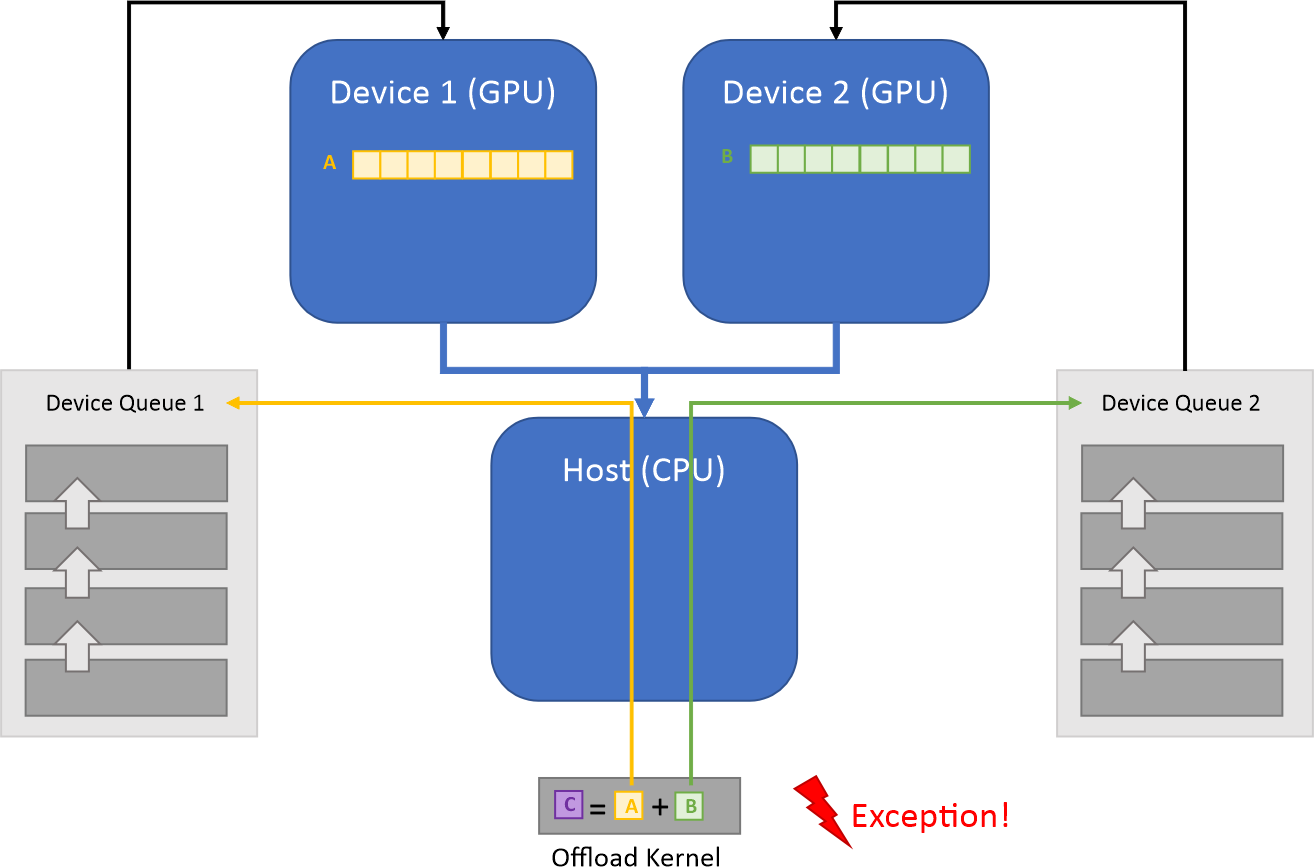

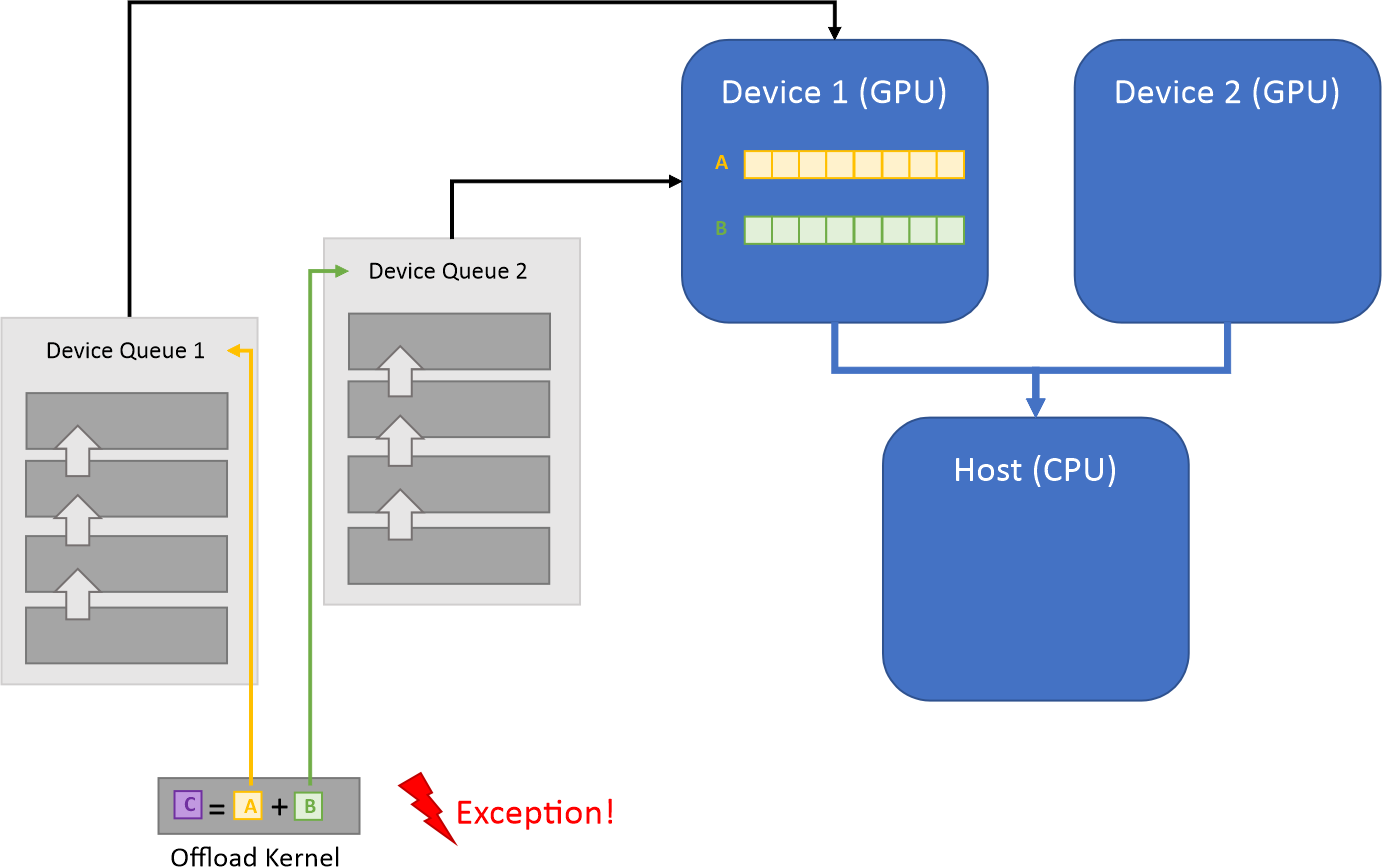

Data Parallel Extensions for Python require all input tensor arguments to have the same allocation queue. Otherwise, an exception is raised. For example, each of the following usages results in an exception.

Input tensors are on different devices and different queues. An exception is raised.

Input tensors are on the same device, but their queues are different. An exception is raised.

Data belongs to the same device, but the queues are different and associated with different sub-devices. An exception is raised.
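Both rules of Compute-Follows-Data, deducing the execution queue from the inputs and rejecting inputs with different queues, fit in a few lines of plain Python. This is a hypothetical model, not dpctl or dpnp code: Queue, Array, deduce_queue, and add are illustrative names, queues are compared by identity, and ValueError is only a placeholder, since the exception type raised by the real libraries may differ.

```python
# Hypothetical model of Compute-Follows-Data; not the dpctl or dpnp API.


class Queue:
    """Stand-in queue; two queues are the same only if they are one object."""

    def __init__(self, device: str):
        self.device = device


class Array:
    """Stand-in array that records the queue it was allocated on."""

    def __init__(self, data, queue: Queue):
        self.data = list(data)
        self.queue = queue


def deduce_queue(*arrays: Array) -> Queue:
    """Return the common allocation queue of all inputs, or raise."""
    queue = arrays[0].queue
    for arr in arrays[1:]:
        if arr.queue is not queue:
            # ValueError is a placeholder for the library's own exception.
            raise ValueError(
                "inputs are allocated on different queues "
                f"({queue.device!r} vs {arr.queue.device!r})"
            )
    return queue


def add(a: Array, b: Array) -> Array:
    q = deduce_queue(a, b)  # the execution queue follows the data
    result = [x + y for x, y in zip(a.data, b.data)]
    return Array(result, queue=q)  # the output is allocated on the same queue


device_queue = Queue("device 1")
A = Array([1, 2, 3], device_queue)
B = Array([4, 5, 6], device_queue)
C = add(A, B)
print(C.data, C.queue is device_queue)  # [5, 7, 9] True

# Same device, but a second, distinct queue: the call is rejected.
other_queue = Queue("device 1")
D = Array([7, 8, 9], other_queue)
try:
    add(A, D)
except ValueError as exc:
    print("error:", exc)
```

Comparing queues by identity rather than by device is what makes the "same device, different queues" case fail, matching the second and third situations described above.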

Copying Data Between Devices and Queues

Data Parallel Extensions for Python create one canonical queue per device, so normally you do not need to manage queues directly. Having one canonical queue per device allows you to copy data between devices using the to_device() method:

```python
a_new = a.to_device(b.device)
```

Here, array a is copied into a new array a_new on the device associated with array b. Arrays b and a_new are associated with the same queue.

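Alternatively, you can use the asarray() function of either dpnp or dpctl.tensor:

```python
import dpctl.tensor
import dpnp
a_new = dpnp.asarray(a, device=b.device)          # via dpnp
a_new = dpctl.tensor.asarray(a, device=b.device)  # via dpctl.tensor
```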
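The reason a single target device is enough to pick a queue can be shown with a toy model of a per-device queue cache. This is a hypothetical sketch, not how dpctl is implemented: Device, Queue, canonical_queue, and Array are illustrative names. The point it demonstrates is that, when every array created for a device reuses that device's one canonical queue, a copy made with to_device(b.device) automatically shares b's queue.

```python
# Hypothetical model of one canonical queue per device; not dpctl internals.


class Device:
    """Stand-in device handle, for example 'gpu:0'."""

    def __init__(self, name: str):
        self.name = name


class Queue:
    def __init__(self, device: Device):
        self.device = device


# One canonical queue per device, created on first use and then reused.
_canonical_queues = {}


def canonical_queue(device: Device) -> Queue:
    if device not in _canonical_queues:
        _canonical_queues[device] = Queue(device)
    return _canonical_queues[device]


class Array:
    def __init__(self, data, device: Device):
        self.data = list(data)  # each array owns its own copy of the data
        self.queue = canonical_queue(device)

    @property
    def device(self) -> Device:
        return self.queue.device

    def to_device(self, device: Device) -> "Array":
        # Copy the data into a new array allocated for the target device.
        return Array(self.data, device)


gpu0, gpu1 = Device("gpu:0"), Device("gpu:1")
a = Array([1.0, 2.0], gpu0)
b = Array([3.0, 4.0], gpu1)

a_new = a.to_device(b.device)
print(a_new.device.name)       # gpu:1
print(a_new.queue is b.queue)  # True: both use gpu1's canonical queue
print(a.queue is a_new.queue)  # False: the original a stays on gpu0
```

Because the queue is looked up from the device, the copy and b can then be passed to the same kernel without violating the same-queue rule.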

Creating Additional Queues

As mentioned before, Data Parallel Extensions for Python automatically create one canonical queue per device, and you normally work with this queue implicitly. However, you can create as many additional queues per device as needed and work with them explicitly, for example, for profiling purposes.
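Why an extra queue helps with profiling can be sketched with a toy queue whose profiling is opt-in. This is a hypothetical pure-Python model: Device, Queue, enable_profiling, timings, and square_all are illustrative names, and timing is done on the host with time.perf_counter. In dpctl itself, a profiling-enabled queue is created with dpctl.SyclQueue(..., property="enable_profiling") and can be passed to array constructors through their sycl_queue keyword; see the dpctl documentation for details.

```python
# Hypothetical model of an extra, profiling-enabled queue; not the dpctl API.
import time


class Device:
    def __init__(self, name: str):
        self.name = name


class Queue:
    """Stand-in queue; profiling is switched on per queue."""

    def __init__(self, device: Device, enable_profiling: bool = False):
        self.device = device
        self.enable_profiling = enable_profiling
        self.timings = []  # (kernel name, seconds) pairs, filled only when profiling

    def submit(self, kernel, *args):
        start = time.perf_counter()
        result = kernel(*args)
        if self.enable_profiling:
            self.timings.append((kernel.__name__, time.perf_counter() - start))
        return result


def square_all(xs):
    return [x * x for x in xs]


gpu0 = Device("gpu:0")
default_q = Queue(gpu0)                           # plays the canonical queue
profiling_q = Queue(gpu0, enable_profiling=True)  # additional, explicit queue

default_q.submit(square_all, range(1000))
out = profiling_q.submit(square_all, range(1000))
print(len(default_q.timings), len(profiling_q.timings))  # 0 1
print(profiling_q.timings[0][0])                         # square_all
```

Keeping profiling on a separate queue leaves the canonical queue untouched, so code that relies on implicit queues behaves as before.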

See the Data Parallel Control (dpctl) documentation for more details about queues.